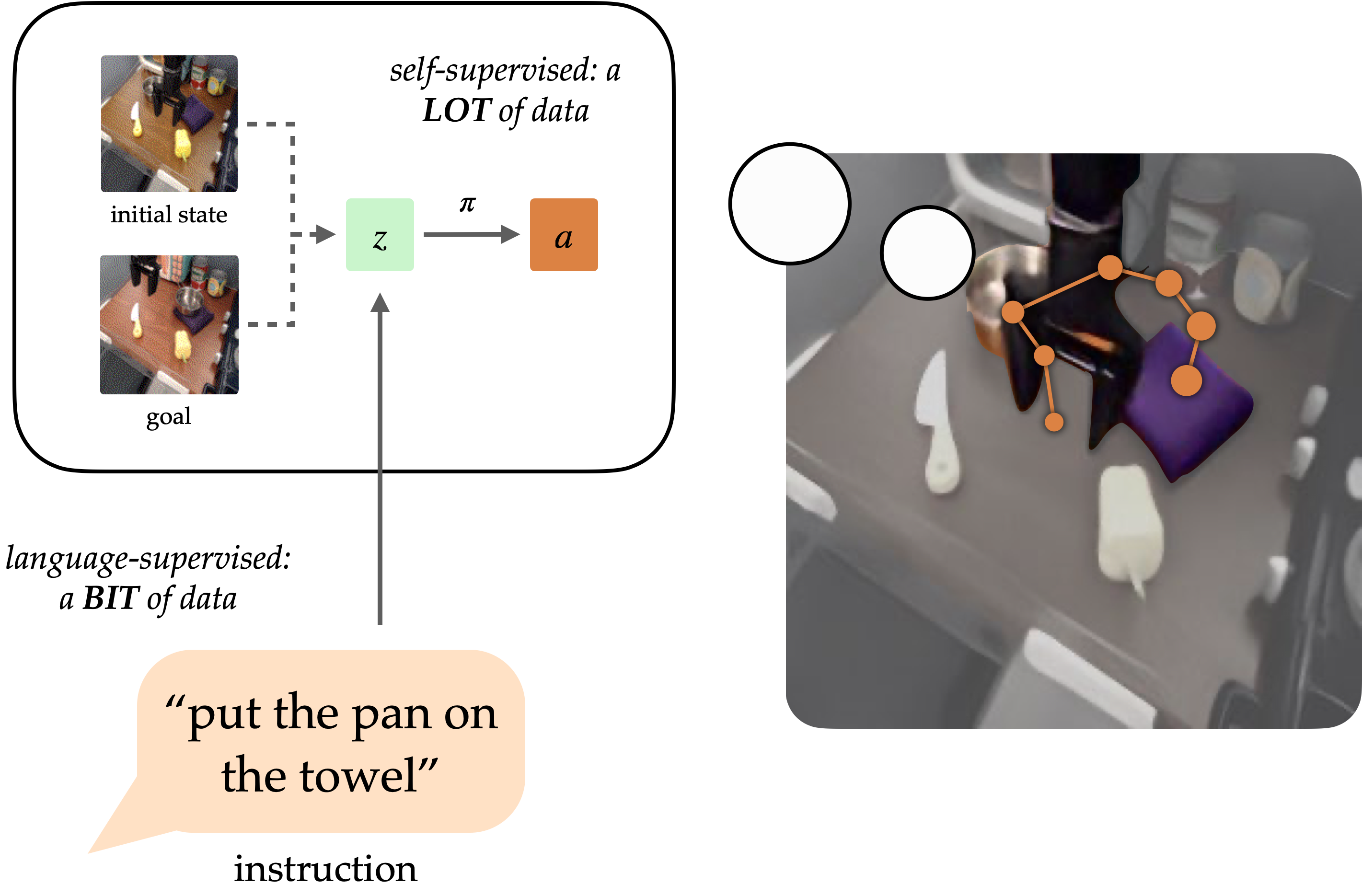

A longstanding goal in the field of robot learning is to create generalist agents that can perform tasks for humans. Natural language has the potential to be an easy-to-use interface for humans to specify arbitrary tasks, but it is difficult to train robots to follow language instructions. Approaches such as language-conditioned behavioral cloning (LCBC) train policies to directly imitate expert actions conditioned on language, but they require humans to annotate all training trajectories and generalize poorly across scenes and behaviors. Meanwhile, recent goal-conditioned approaches perform better on general manipulation tasks but do not enable easy task specification for human operators. How can we reconcile the ease of task specification through LCBC-like approaches with the performance improvements of goal-conditioned learning?

Conceptually, an instruction-following robot requires two capabilities. It needs to ground the language instruction in the physical environment, and then carry out a sequence of actions to complete the intended task. These capabilities do not need to be learned end-to-end from human-annotated trajectories alone; they can instead be learned separately from appropriate data sources. Vision-language data from non-robot sources can help learn language grounding that generalizes to diverse instructions and visual scenes. Meanwhile, unlabeled robot trajectories can be used to train a robot to reach specific goal states, even when they are not associated with language instructions.

Conditioning on visual goals (i.e., goal images) provides complementary benefits for policy learning. As a form of task specification, goals are desirable for scaling because they can be generated for free through hindsight relabeling (any state reached along a trajectory can serve as a goal). This allows policies to be trained via goal-conditioned behavioral cloning (GCBC) on large amounts of unannotated and unstructured trajectory data, including data collected autonomously by the robot itself. Goals are also easier to ground because, as images, they can be compared directly pixel-by-pixel with other states.
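To make hindsight relabeling concrete, here is a minimal Python sketch that turns one unlabeled trajectory into goal-conditioned training tuples. It assumes a trajectory is stored as T+1 observations and T actions, and it draws each goal uniformly from the states reached later in the same trajectory. That sampling rule is one common choice and an assumption here, not necessarily the one used for GRIF; the function name `hindsight_relabel` is hypothetical.

```python
import random
from typing import List, Optional, Sequence, Tuple, TypeVar

Obs = TypeVar("Obs")
Act = TypeVar("Act")


def hindsight_relabel(
    observations: Sequence[Obs],
    actions: Sequence[Act],
    rng: Optional[random.Random] = None,
) -> List[Tuple[Obs, Act, Obs]]:
    """Turn one unlabeled trajectory into (observation, action, goal) tuples.

    Assumes len(observations) == len(actions) + 1, i.e. the state reached
    after the final action is included. For step t, the goal is drawn
    uniformly from the later states observations[t + 1 .. T].
    """
    if len(observations) != len(actions) + 1:
        raise ValueError("expected one more observation than actions")
    rng = rng or random.Random(0)
    horizon = len(actions)
    samples = []
    for t in range(horizon):
        # Any state reached later in the trajectory is a valid goal.
        goal_index = rng.randint(t + 1, horizon)
        samples.append((observations[t], actions[t], observations[goal_index]))
    return samples


# Toy trajectory: integer "states" make it easy to see that goals lie ahead.
print(hindsight_relabel([0, 1, 2, 3, 4], ["a", "b", "c", "d"]))
```

No human labels are needed: every tuple's goal comes from the trajectory itself, which is what lets GCBC consume unannotated data.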

However, goals are less intuitive for human users than natural language. In most cases, it is easier for users to describe the task they want performed than to provide a goal image, which would likely require performing the task anyway to produce the image. By exposing a language interface for goal-conditioned policies, we can combine the strengths of goal and language task specification to enable generalist robots that can be easily commanded. Our method, discussed below, exposes such an interface, uses vision-language data to generalize to diverse instructions and scenes, and improves the robot's physical skills by digesting large unstructured robot datasets.

Goal Representations for Instruction Following

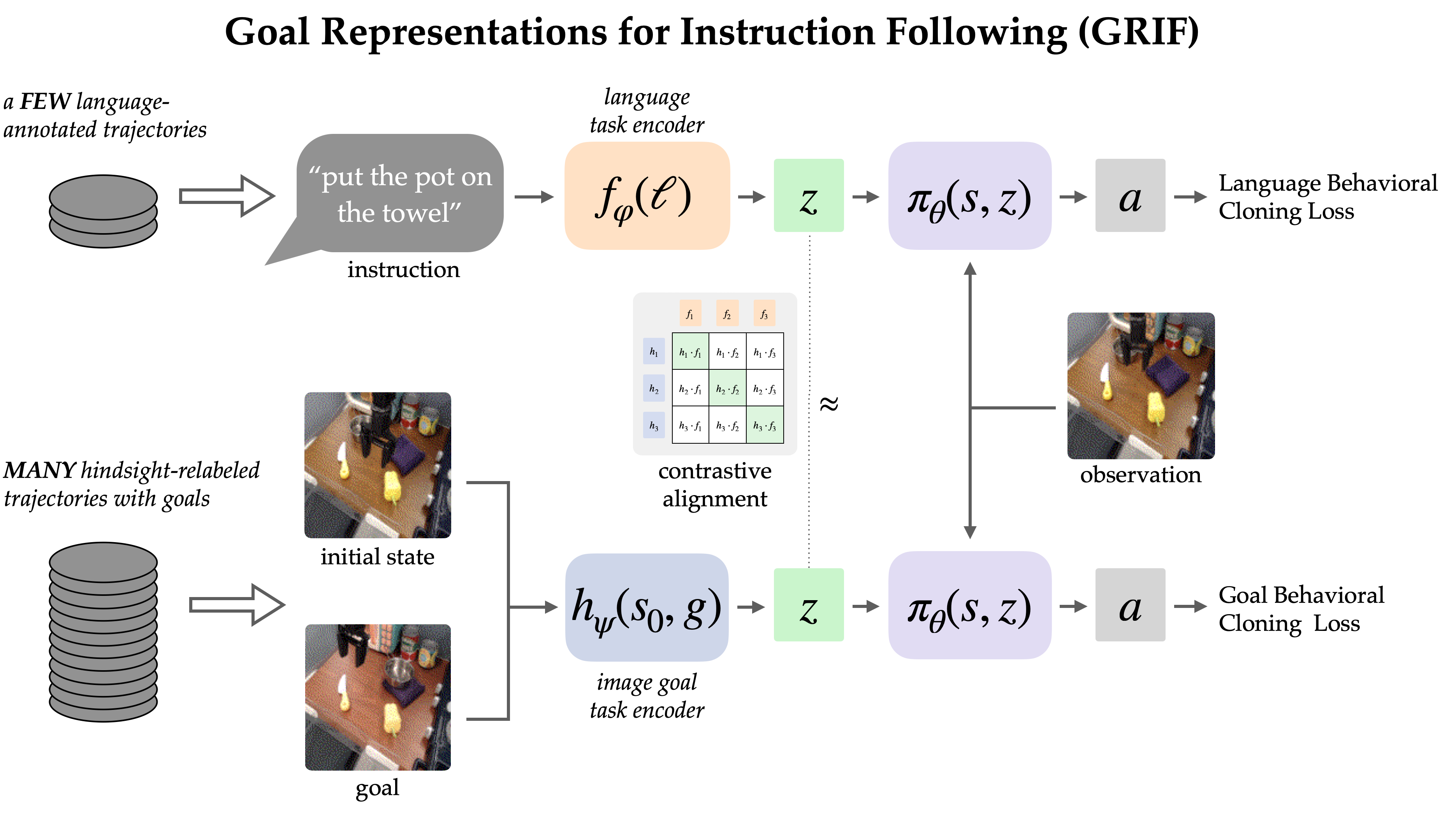

The GRIF model consists of a language encoder, a goal encoder, and a policy network. The encoders respectively map language instructions and goal images into a shared task representation space, which conditions the policy network when predicting actions. The model can be conditioned on either language instructions or goal images to predict actions, but we primarily use goal-conditioned training as a way to improve the language-conditioned use case.

Our approach, Goal Representations for Instruction Following (GRIF), jointly trains a language- and a goal-conditioned policy with aligned task representations. Our key insight is that these representations, aligned across the language and goal modalities, let us effectively combine the benefits of goal-conditioned learning with a language-conditioned policy. After training on mostly unlabeled demonstration data, the learned policies generalize across language and scenes.

We train GRIF on a version of the Bridge-v2 dataset containing 7k labeled demonstration trajectories and 47k unlabeled ones in a kitchen manipulation setting. Since labeling trajectories in this dataset requires manual human annotation, being able to use the 47k trajectories directly, without annotation, significantly improves efficiency.

To learn from both types of data, GRIF is trained jointly with language-conditioned behavioral cloning (LCBC) and goal-conditioned behavioral cloning (GCBC). The labeled dataset contains both language and goal task specifications, so we use it to supervise both the language- and goal-conditioned predictions (i.e., LCBC and GCBC). The unlabeled dataset contains only goals and is used for GCBC. The difference between LCBC and GCBC is just a matter of selecting the task representation from the corresponding encoder; that representation is passed into a shared policy network to predict actions.
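The routing described above can be sketched as a single loss function. This is a minimal illustration, not the GRIF implementation: `Sample`, `joint_bc_loss`, and the mean-squared-error action loss are hypothetical stand-ins (a real policy would typically use a learned action distribution), and the goal encoder takes the current state together with the goal, following the state-goal pairing described in the alignment section. Labeled samples contribute to both LCBC and GCBC, unlabeled samples only to GCBC, and both representations feed the same `policy` callable.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence

Vector = List[float]


@dataclass
class Sample:
    obs: Vector
    goal: Vector
    action: Vector
    instruction: Optional[str] = None  # None for unlabeled trajectories


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


def joint_bc_loss(
    batch: Sequence[Sample],
    lang_encoder: Callable[[str], Vector],
    goal_encoder: Callable[[Vector, Vector], Vector],
    policy: Callable[[Vector, Vector], Vector],
) -> float:
    """LCBC + GCBC with one shared policy; only the task encoder differs."""
    gcbc_total, lcbc_total, n_labeled = 0.0, 0.0, 0
    for s in batch:
        # GCBC: every sample (labeled or not) has a goal via relabeling.
        z_goal = goal_encoder(s.obs, s.goal)
        gcbc_total += mse(policy(s.obs, z_goal), s.action)
        # LCBC: only labeled samples carry an instruction.
        if s.instruction is not None:
            z_lang = lang_encoder(s.instruction)
            lcbc_total += mse(policy(s.obs, z_lang), s.action)
            n_labeled += 1
    gcbc = gcbc_total / len(batch)
    lcbc = lcbc_total / n_labeled if n_labeled else 0.0
    return lcbc + gcbc


# Toy usage with stand-in encoders and a policy that echoes the task vector.
batch = [
    Sample(obs=[0.0], goal=[1.0], action=[1.0], instruction="a"),
    Sample(obs=[0.0], goal=[2.0], action=[1.0]),  # unlabeled
]
loss = joint_bc_loss(
    batch,
    lang_encoder=lambda text: [float(len(text))],
    goal_encoder=lambda obs, goal: [goal[0] - obs[0]],
    policy=lambda obs, z: [z[0]],
)
print(loss)  # prints 0.5
```

Because the policy never sees which encoder produced its task vector, any skill learned from the unlabeled goal-conditioned samples is available to the language-conditioned path as well.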

Because the policy network is shared, we can expect some improvement from using the unlabeled dataset for goal-conditioned training. However, GRIF enables stronger transfer between the two modalities by recognizing that some language instructions and goal images specify the same behavior. In particular, we exploit this structure by requiring that the language and goal representations of the same semantic task be similar. Assuming this structure holds, unlabeled data can also benefit the language-conditioned policy, since the goal representation approximates the representation of the missing instruction.

Alignment through Contrastive Learning

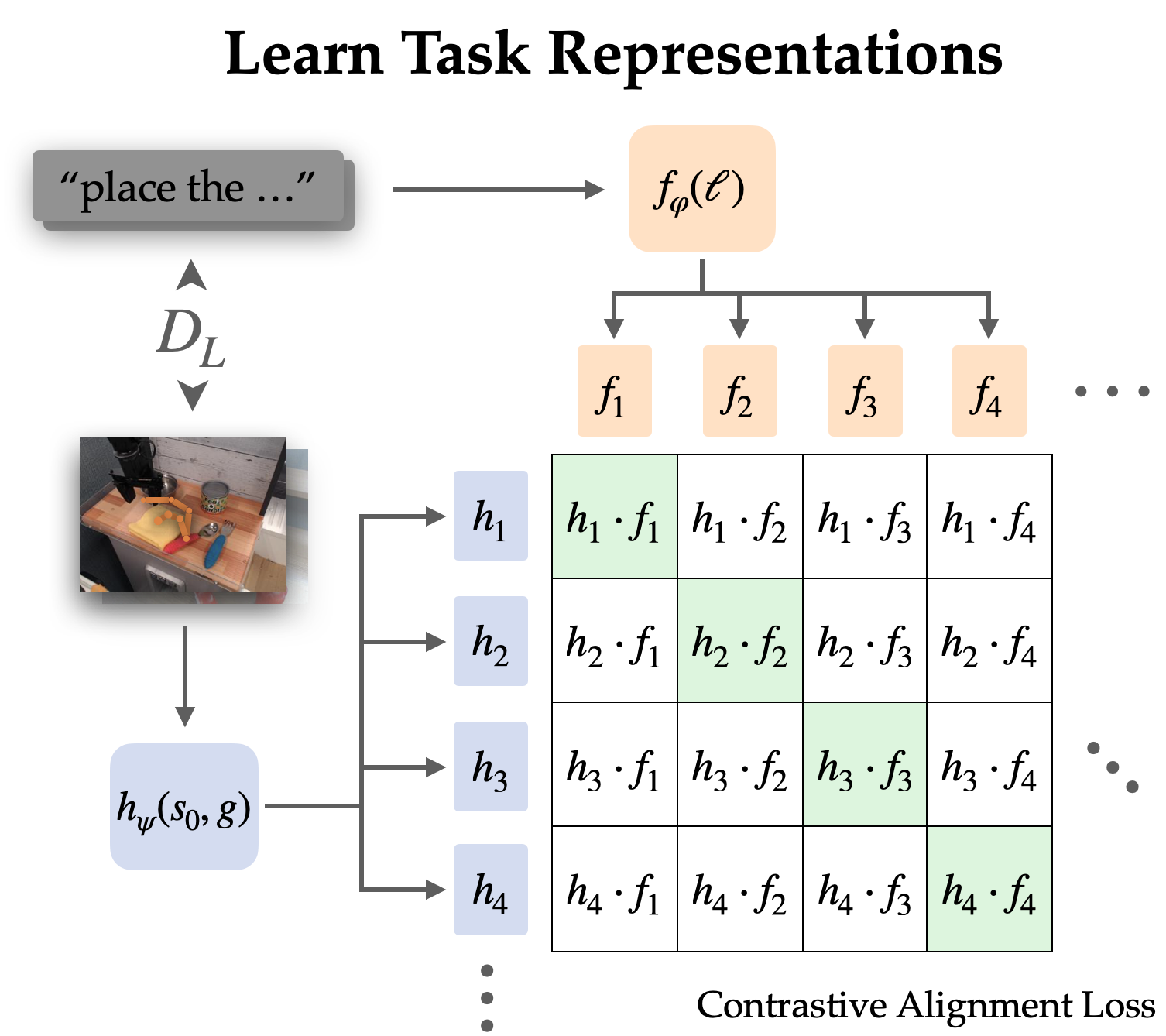

We explicitly align the representations of goal-conditioned and language-conditioned tasks on the labeled dataset through contrastive learning.

Because language often describes relative change, we choose to align representations of state-goal pairs with language instructions (rather than aligning only goals with language). Empirically, this also makes the representations easier to learn, since they can omit most of the information in the images and focus on the change from state to goal.

We learn this alignment structure through an InfoNCE objective on instructions and images from the labeled dataset. We train dual image and text encoders by contrastive learning on matching pairs of language and goal representations. The objective encourages high similarity between representations of the same task and low similarity between representations of different tasks, where negative examples are sampled from other trajectories.
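A minimal pure-Python sketch of a symmetric InfoNCE loss of the kind described above. Here `lang[i]` and `task[i]` are assumed to be embeddings of the same task (the instruction and the encoded state-goal pair), and every other row in the batch acts as a negative. The CLIP-style averaging of both directions, the cosine similarity, and the temperature of 0.1 are assumptions for illustration; a practical version would run batched in a deep-learning framework.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def _normalize(v: Vector) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def _logsumexp(xs: Sequence[float]) -> float:
    m = max(xs)  # subtract the max for numerical stability
    return m + math.log(sum(math.exp(x - m) for x in xs))


def info_nce(lang: Sequence[Vector], task: Sequence[Vector], temperature: float = 0.1) -> float:
    """Symmetric InfoNCE: (lang[i], task[i]) are positives, all other pairs negatives."""
    lang_n = [_normalize(v) for v in lang]
    task_n = [_normalize(v) for v in task]
    n = len(lang_n)
    # logits[i][j] = cosine(lang_i, task_j) / temperature
    logits = [[sum(a * b for a, b in zip(l, t)) / temperature for t in task_n] for l in lang_n]
    # Cross-entropy with the matching index as the target, in both directions.
    lang_to_task = sum(_logsumexp(logits[i]) - logits[i][i] for i in range(n)) / n
    task_to_lang = sum(
        _logsumexp([logits[j][i] for j in range(n)]) - logits[i][i] for i in range(n)
    ) / n
    return 0.5 * (lang_to_task + task_to_lang)


aligned = info_nce([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
swapped = info_nce([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
print(aligned, swapped)
```

The loss is near zero when each instruction is most similar to its own state-goal representation and large when the pairing is scrambled; with identical embeddings for every item it reduces to log N, the chance level for a batch of N.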

When using naive negative sampling (uniformly from the rest of the dataset), the learned representations often ignored the actual task and simply aligned instructions and goals that referred to the same scene. To use the policy in the real world, associating language with a scene is not very useful; instead, we need the representation to disambiguate between different tasks in the same scene. We therefore use a hard negative sampling strategy, in which up to half of the negatives are sampled from different trajectories in the same scene.
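The hard negative strategy could be sketched as follows. This assumes a hypothetical mapping from trajectory IDs to scene IDs and draws negatives for one anchor trajectory explicitly; in a batched contrastive setup, the same effect would usually come from how batches are assembled. The function name and data layout are illustrative assumptions.

```python
import random
from typing import Dict, List


def sample_negatives(
    anchor: str,
    traj_scene: Dict[str, str],
    num_negatives: int,
    rng: random.Random,
) -> List[str]:
    """Pick negative trajectories for one anchor trajectory.

    Up to half of the negatives are "hard": other trajectories recorded in
    the same scene as the anchor. The rest are drawn uniformly from all
    remaining trajectories (which may include further same-scene ones).
    """
    scene = traj_scene[anchor]
    same_scene = [t for t, s in traj_scene.items() if s == scene and t != anchor]
    n_hard = min(num_negatives // 2, len(same_scene))
    hard = rng.sample(same_scene, n_hard)
    chosen = set(hard)
    remaining = [t for t in traj_scene if t != anchor and t not in chosen]
    n_easy = min(num_negatives - n_hard, len(remaining))
    easy = rng.sample(remaining, n_easy)
    return hard + easy


scenes = {"t0": "kitchen", "t1": "kitchen", "t2": "kitchen",
          "t3": "sink", "t4": "sink", "t5": "sink"}
negatives = sample_negatives("t0", scenes, num_negatives=4, rng=random.Random(0))
print(negatives)
```

Same-scene negatives share most visual content with the anchor, so the only way to tell them apart is the task itself, which is exactly the distinction the representation needs to learn.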

Naturally, this contrastive learning setup invites the use of pre-trained vision-language models such as CLIP. These models demonstrate effective zero-shot and few-shot generalization on vision-language tasks and offer a way to incorporate knowledge from internet-scale pre-training. However, most vision-language models are designed to align a single static image with its caption: they cannot capture changes in the environment, and they perform poorly when they must attend to a single object in a cluttered scene.

To address these issues, we devise a mechanism to adapt and fine-tune CLIP for aligning task representations. We modify the CLIP architecture so that it operates on a pair of images combined with early fusion (stacked channel-wise). This turns out to be a capable initialization for encoding pairs of state and goal images, and one that is particularly good at preserving the benefits of CLIP's pre-training.
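The early-fusion idea can be illustrated at the level of the first layer. In the sketch below, images are nested lists of shape H x W x C, `early_fuse` stacks state and goal channel-wise into six channels, and `expand_first_layer` widens a pretrained 3-channel input projection to six channels. The projection is a simplified 1x1 stand-in for CLIP's patch-embedding convolution, and the half-scale copy used to initialize the new weights is a hypothetical choice, made so that a pair with identical state and goal reproduces the pretrained response; how GRIF actually initializes these weights is not specified here.

```python
import math
from typing import List

Image = List[List[List[float]]]  # H x W x C as nested lists
Weight = List[List[float]]       # [out_channels][in_channels], a 1x1 projection


def early_fuse(state: Image, goal: Image) -> Image:
    """Stack state and goal channel-wise: (H, W, 3) + (H, W, 3) -> (H, W, 6)."""
    return [
        [s_px + g_px for s_px, g_px in zip(s_row, g_row)]
        for s_row, g_row in zip(state, goal)
    ]


def expand_first_layer(weight: Weight) -> Weight:
    """Widen a 3-channel input projection to 6 channels.

    Hypothetical init: copy the pretrained weights into both halves at half
    scale, so a fused pair with state == goal gives the pretrained output.
    """
    return [[0.5 * w for w in row] + [0.5 * w for w in row] for row in weight]


def apply_1x1(weight: Weight, pixel: List[float]) -> List[float]:
    """Apply the projection to one pixel's channel vector."""
    return [sum(w * x for w, x in zip(row, pixel)) for row in weight]


pretrained = [[1.0, 0.0, 2.0], [0.5, 0.5, 0.5]]
fused_weight = expand_first_layer(pretrained)
pixel = [1.0, 2.0, 3.0]
print(apply_1x1(pretrained, pixel), apply_1x1(fused_weight, pixel + pixel))
```

Starting from weights that behave like the pretrained layer on an unchanged scene means fine-tuning begins close to CLIP's original features, which is one plausible reason early fusion preserves the pre-training benefits.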

Robot Policy Results

For our main results, we evaluate the GRIF policy in the real world on 15 tasks across 3 scenes. The instructions were chosen as a mix of ones that are well represented in the training data and novel ones that require some degree of compositional generalization. One of the scenes also features an unseen combination of objects.

We compare GRIF against plain LCBC as well as stronger baselines inspired by prior work such as LangLfP and BC-Z. LLfP corresponds to joint training with LCBC and GCBC. BC-Z is an adaptation of the method of the same name to our setting, where we train on LCBC, GCBC, and a simple alignment term. It optimizes a cosine distance loss between the task representations and does not use image-language pre-training.
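For contrast with the contrastive objective, here is a minimal sketch of a cosine distance alignment term like the one the BC-Z-style baseline optimizes. The function name and plain-list embeddings are assumptions for illustration. This form only pulls matched language and goal representations together; unlike InfoNCE, it has no negatives that push representations of different tasks apart.

```python
import math
from typing import Sequence

Vector = Sequence[float]


def cosine_distance_loss(lang_reps: Sequence[Vector], goal_reps: Sequence[Vector]) -> float:
    """Mean of 1 - cos(z_lang, z_goal) over matched pairs (0 = aligned, 2 = opposite)."""
    total = 0.0
    for a, b in zip(lang_reps, goal_reps):
        dot = sum(x * y for x, y in zip(a, b))
        norm_a = math.sqrt(sum(x * x for x in a))
        norm_b = math.sqrt(sum(y * y for y in b))
        total += 1.0 - dot / (norm_a * norm_b)
    return total / len(lang_reps)


print(cosine_distance_loss([[1.0, 0.0], [1.0, 0.0]], [[0.0, 1.0], [-1.0, 0.0]]))  # prints 1.5
```

Because nothing in this loss penalizes two different tasks for mapping to the same point, it cannot by itself prevent the scene-level shortcut that hard negatives are meant to counter.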

These policies were susceptible to two main failure modes. First, they can fail to understand the language instruction, which causes them to attempt another task or to perform no useful actions at all. When language grounding is not robust, policies may even start an unintended task after completing the correct one, since the original instruction is out of context.

Examples of grounding failures

“Put the mushrooms into the metal pan”

“Put the spoon on the towel”

“Put the yellow bell pepper on the cloth”

“Put the yellow bell pepper on the cloth”

The other failure mode is failing to manipulate objects. This can be caused by a missed grasp, imprecise motion, or releasing the object at the wrong time. We note that these are not inherent shortcomings of the robot setup, since a GCBC policy trained on the entire dataset consistently succeeds at manipulation. Rather, this failure mode generally indicates that the goal-conditioned data is not being leveraged effectively.

Examples of failed manipulation

“Move the bell pepper to the left of the table”

“Put the green peppers in the pot”

“Move the towel next to the microwave”

Comparing the baselines, each suffered from both failure modes to different degrees. LCBC relies solely on the small labeled trajectory dataset, and its poor manipulation capability prevents it from completing any tasks. LLfP jointly trains the policy on labeled and unlabeled data and shows significantly better manipulation capability than LCBC. It achieves reasonable success rates on common instructions but fails to ground more complex ones. BC-Z's alignment strategy also improves manipulation capability, likely because alignment improves transfer between modalities. However, without an external source of vision-language data, it still struggles to generalize to new instructions.

GRIF shows the best generalization while also having strong manipulation capabilities. It is able to ground the language instructions and carry out the task even when many distinct tasks are possible in the scene. We show some rollouts and the corresponding instructions below.

Policy rollouts from GRIF

“Move the pot to the front”

“Put the green peppers in the pot”

“Put the knife on the purple fabric”

“Put the spoon on the towel”

Conclusion

GRIF enables a robot to leverage large amounts of unlabeled trajectory data to learn goal-conditioned policies, while providing a “language interface” to these policies through aligned language-goal task representations. In contrast to prior language-image alignment methods, our representations align changes in state to language, which we show yields significant improvements over standard CLIP-style image-language alignment objectives. Our experiments show that our method can effectively exploit unlabeled robot trajectories, achieving large performance improvements over baselines and over methods that only use language-annotated data.

Our method has a number of limitations that could be addressed in future work. GRIF is less suited to tasks where the instructions say more about how to do the task than what to do (e.g., “Pour the water slowly”); such qualitative instructions may require other types of alignment losses that consider the intermediate steps of task execution. GRIF also assumes that all language grounding comes from the fully annotated portion of our dataset or from a pre-trained VLM. An exciting direction for future work is to extend our alignment loss to use human video data to learn rich semantics from internet-scale data. Such an approach could then use this data to improve grounding on language beyond the robot dataset and enable broadly generalizable robot policies that can follow user instructions.

This post is based on the following paper:

If GRIF inspires your work, please cite it as follows:

@inproceedings{myers2023goal,
  title={Goal Representations for Instruction Following: A Semi-Supervised Language Interface to Control},
  author={Vivek Myers and Andre He and Kuan Fang and Homer Walke and Philippe Hansen-Estruch and Ching-An Cheng and Mihai Jalobeanu and Andrey Kolobov and Anca Dragan and Sergey Levine},
  booktitle={Conference on Robot Learning},
  year={2023},
}