Diffusion models have recently emerged as the de facto standard for generating complex, high-dimensional outputs. You may know them for their ability to produce stunning AI art and hyper-realistic synthetic images, but they have also found success in other applications such as drug design and continuous control. The key idea behind diffusion models is to iteratively transform random noise into a sample, such as an image or a protein structure. This is typically motivated as a maximum likelihood estimation problem, where the model is trained to generate samples that match the training data as closely as possible.

However, most use cases of diffusion models are not directly concerned with matching the training data, but with a downstream objective. We don't just want an image that looks like existing images, but one that has a specific type of appearance; we don't just want a drug molecule that is physically plausible, but one that is as effective as possible. In this post, we show how diffusion models can be trained directly on these downstream objectives using reinforcement learning (RL). To do this, we fine-tune Stable Diffusion on a variety of objectives, including image compressibility, human-perceived aesthetic quality, and prompt-image alignment. The last of these objectives uses feedback from a large vision-language model to improve the model's performance on unusual prompts, demonstrating how powerful AI models can be used to improve each other without any humans in the loop.

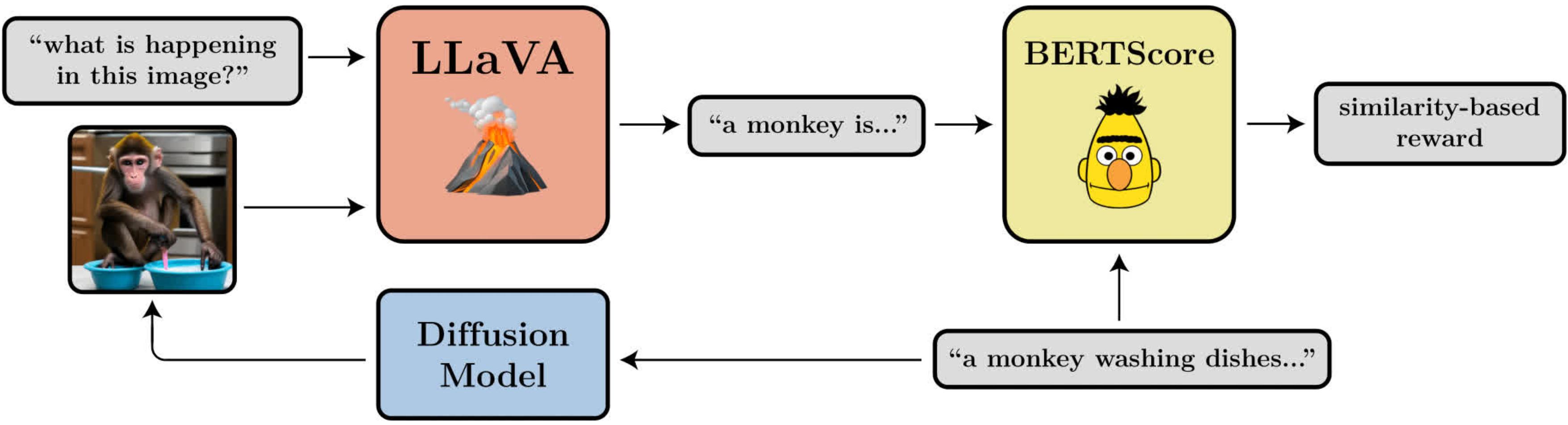

A diagram illustrating the prompt-image alignment objective. It uses LLaVA, a large vision-language model, to evaluate generated images.

Denoising Diffusion Policy Optimization

When turning diffusion into an RL problem, we make only the most basic assumption: given a sample (such as an image), we have access to a reward function that we can evaluate to tell us how "good" that sample is. Our goal is for the diffusion model to generate samples that maximize this reward function.

Diffusion models are typically trained using a loss function derived from maximum likelihood estimation (MLE), meaning they are encouraged to generate samples that make the training data look more likely. In the RL setting, we no longer have training data, only samples from the diffusion model and their associated rewards. One way we can still use the same MLE-motivated loss function is to treat the samples as training data and incorporate the rewards by weighting the loss for each sample by its reward. This gives us an algorithm that we call reward-weighted regression (RWR), after existing algorithms from the RL literature.
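As a rough illustration of the weighting idea (not the exact scheme used in our experiments), the sketch below turns a batch of rewards into softmax weights and uses them to average per-sample losses. The function names and the temperature `beta` are assumptions introduced here, and the per-sample losses stand in for the usual diffusion training loss evaluated on each generated sample.

```python
import math

def rwr_weights(rewards, beta=1.0):
    """Softmax weights exp(beta * r_i) / sum_j exp(beta * r_j).

    Subtracting the max reward first keeps the exponentials numerically stable.
    `beta` is a hypothetical temperature: larger values favor high-reward samples more.
    """
    max_reward = max(rewards)
    exps = [math.exp(beta * (r - max_reward)) for r in rewards]
    total = sum(exps)
    return [e / total for e in exps]

def reward_weighted_loss(per_sample_losses, rewards, beta=1.0):
    """Weighted average of per-sample losses, with weights derived from rewards."""
    weights = rwr_weights(rewards, beta)
    return sum(w * loss for w, loss in zip(weights, per_sample_losses))

# Example: the sample with the highest reward dominates the weighted loss.
losses = [0.9, 0.5, 0.7]
rewards = [0.1, 2.0, 0.3]
print(rwr_weights(rewards))
print(reward_weighted_loss(losses, rewards))
```

With equal rewards the weights are uniform and the result reduces to the ordinary mean loss.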

However, there are a few problems with this approach. One is that RWR is not a particularly exact algorithm: it maximizes the reward only approximately (see Nair et al., Appendix A). The MLE-inspired loss for diffusion is also not exact; it is derived using a variational bound on the true likelihood of each sample. This means that RWR maximizes the reward through two levels of approximation, which we find significantly hurts its performance.

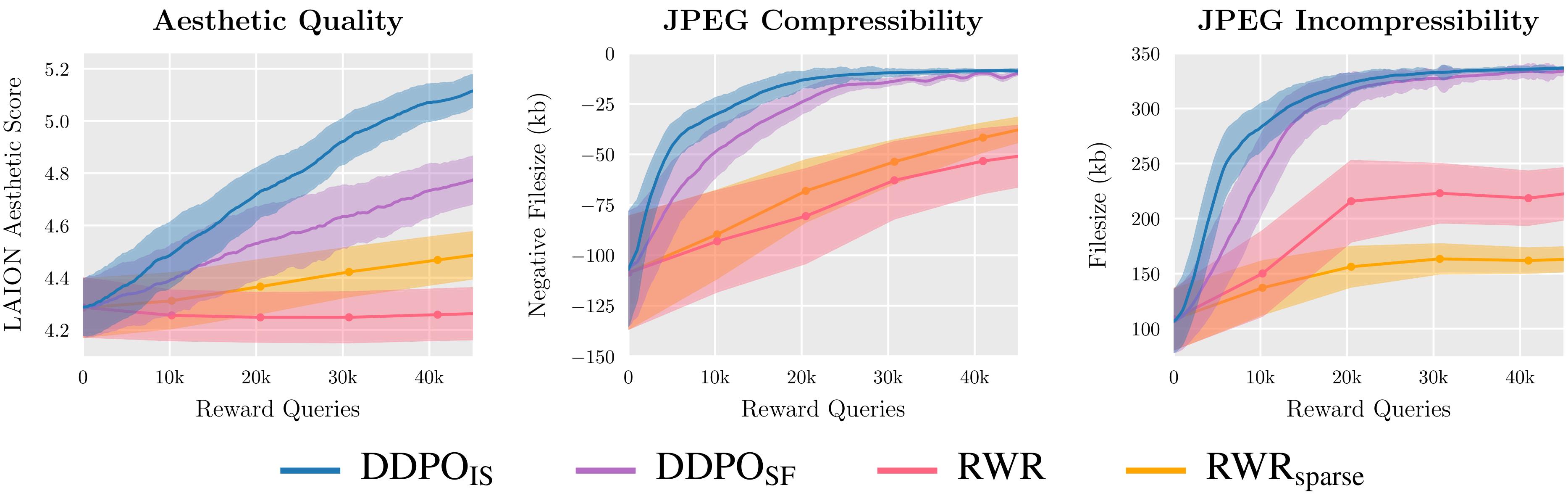

We evaluate two variants of DDPO and two variants of RWR on three reward functions and find that DDPO consistently achieves the best performance.

The key insight of our algorithm, which we call denoising diffusion policy optimization (DDPO), is that we can better maximize the reward of the final sample if we pay attention to the entire sequence of denoising steps that got us there. To do this, we reframe the diffusion process as a multi-step Markov decision process (MDP). In MDP terminology: each denoising step is an action, and the agent only gets a reward on the final step of each denoising trajectory, when the final sample is produced. This framework allows us to apply many powerful algorithms from the RL literature that are designed specifically for multi-step MDPs. Instead of using the approximate likelihood of the final sample, these algorithms use the exact likelihood of each denoising step, which is extremely easy to compute.
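To make the "exact likelihood of each denoising step" concrete, here is a minimal sketch that assumes a sampler in which each step draws the next, less noisy sample from an isotropic Gaussian with a model-predicted mean and a fixed standard deviation. The function names and the plain-list representation of samples are assumptions made for illustration.

```python
import math

def step_log_prob(x_prev, mean, sigma):
    """Log-density of x_prev under N(mean, sigma^2 * I), summed over dimensions.

    x_prev and mean are flat lists of floats; sigma is the step's fixed
    standard deviation (assumed known from the sampler's noise schedule).
    """
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    log_norm = -math.log(sigma) - 0.5 * math.log(2.0 * math.pi)
    return sum(log_norm - 0.5 * ((x - m) / sigma) ** 2 for x, m in zip(x_prev, mean))

def trajectory_log_prob(steps):
    """Sum of per-step log-probabilities over one denoising trajectory.

    `steps` is a list of (x_prev, mean, sigma) tuples, one per denoising step.
    """
    return sum(step_log_prob(x, m, s) for x, m, s in steps)

# Example: a two-step trajectory over a two-dimensional "image".
steps = [
    ([0.5, -0.2], [0.4, 0.0], 1.0),
    ([0.3, -0.1], [0.35, -0.15], 0.5),
]
print(trajectory_log_prob(steps))
```

Because every step is a simple Gaussian, no variational bound is needed at this level; the only approximation left is the one made by the RL algorithm itself.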

We chose to apply policy gradient algorithms because of their ease of implementation and past success in language model fine-tuning. This led to two variants of DDPO: DDPO_SF, which uses the simple score function estimator of the policy gradient, also known as REINFORCE; and DDPO_IS, which uses a more powerful importance-sampled estimator. DDPO_IS is our best-performing algorithm, and its implementation closely follows that of proximal policy optimization (PPO).
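The two estimators can be sketched as scalar surrogate losses. This is a simplified illustration, not our implementation: it works on plain floats, whereas a real version would compute the same expressions on tensors in an autodiff framework and backpropagate through the new log-probabilities. The `advantage` argument (an assumed, already-normalized reward for the trajectory) and `clip_range` are names introduced here.

```python
import math

def score_function_surrogate(step_log_probs, advantage):
    """REINFORCE-style surrogate loss for one trajectory.

    Minimizing -advantage * sum_t log p(step_t) raises the likelihood of
    denoising steps that led to above-average reward.
    """
    return -advantage * sum(step_log_probs)

def clipped_is_surrogate(new_log_probs, old_log_probs, advantage, clip_range=0.2):
    """PPO-style clipped importance-sampling surrogate, averaged over steps.

    old_log_probs come from the model that generated the samples;
    new_log_probs come from the model currently being updated.
    """
    losses = []
    for new_lp, old_lp in zip(new_log_probs, old_log_probs):
        ratio = math.exp(new_lp - old_lp)
        clipped = min(max(ratio, 1.0 - clip_range), 1.0 + clip_range)
        # Take the more pessimistic of the unclipped and clipped objectives.
        losses.append(-min(ratio * advantage, clipped * advantage))
    return sum(losses) / len(losses)

# Example: the same trajectory scored under both surrogates.
old = [-1.2, -0.8, -1.0]
new = [-1.0, -0.9, -0.7]
print(score_function_surrogate(new, advantage=0.5))
print(clipped_is_surrogate(new, old, advantage=0.5))
```

The importance-sampled form lets the same batch of samples be reused for several optimization steps, while the clipping keeps the updated model from drifting too far from the one that generated the data.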

Fine-tuning Stable Diffusion Using DDPO

For our main results, we fine-tune Stable Diffusion v1-4 using DDPO_IS. We have four tasks, each defined by a different reward function:

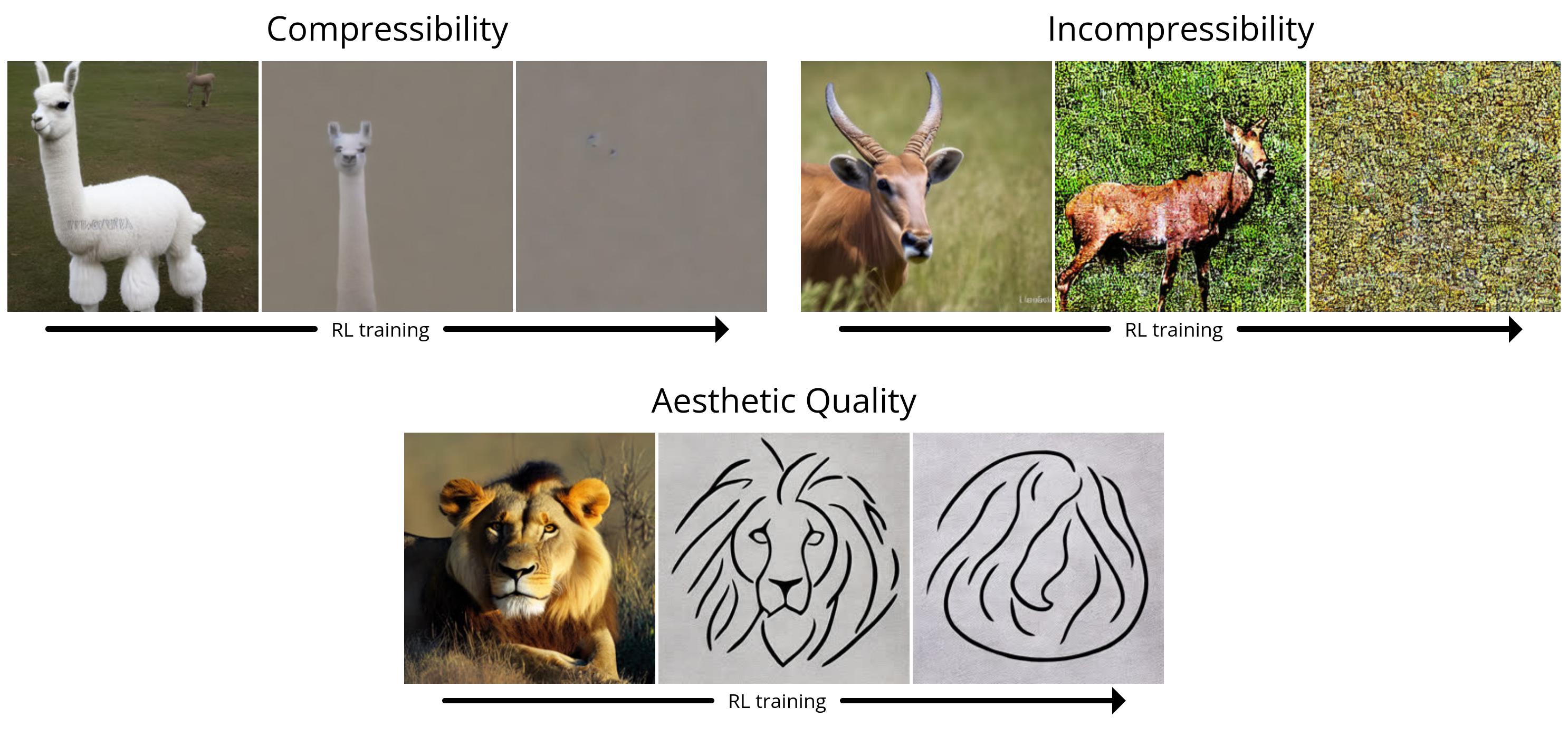

- Compressibility: How easy is the image to compress using the JPEG algorithm? The reward is the negative file size of the image (in kB) when saved as a JPEG.

- Incompressibility: How hard is the image to compress using the JPEG algorithm? The reward is the positive file size of the image (in kB) when saved as a JPEG.

- Aesthetic quality: How aesthetically appealing is the image to the human eye? The reward is the output of the LAION aesthetic predictor, a neural network trained on human preferences.

- Prompt-image alignment: How well does the image represent what was asked for in the prompt? This one is a bit more complicated: we feed the image into LLaVA, ask it to describe the image, and then use BERTScore to compute the similarity between that description and the original prompt.
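The two file-size rewards are simple enough to sketch directly. The snippet below is an illustration under stated assumptions: `file_size_reward` is a hypothetical helper, 1 kB is taken to be 1000 bytes, and the demo uses `zlib` from the standard library as a stand-in encoder so the example runs without an imaging library. It is not JPEG; in practice the bytes would come from saving the generated image as a JPEG.

```python
import random
import zlib

def file_size_reward(encoded: bytes, compressible: bool = True) -> float:
    """Reward based on the encoded size in kB (assuming 1 kB = 1000 bytes).

    compressible=True gives the negative size (smaller files score higher);
    compressible=False gives the positive size (larger files score higher).
    """
    size_kb = len(encoded) / 1000.0
    return -size_kb if compressible else size_kb

# Demo with zlib standing in for a JPEG encoder (an assumption for illustration):
# flat data compresses to almost nothing, random noise barely compresses at all.
rng = random.Random(0)
flat = bytes(10_000)
noisy = bytes(rng.getrandbits(8) for _ in range(10_000))

flat_reward = file_size_reward(zlib.compress(flat))
noisy_reward = file_size_reward(zlib.compress(noisy))
print(flat_reward, noisy_reward)
```

Under the compressibility reward the flat input scores higher than the noisy one; flipping `compressible` reverses the ordering.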

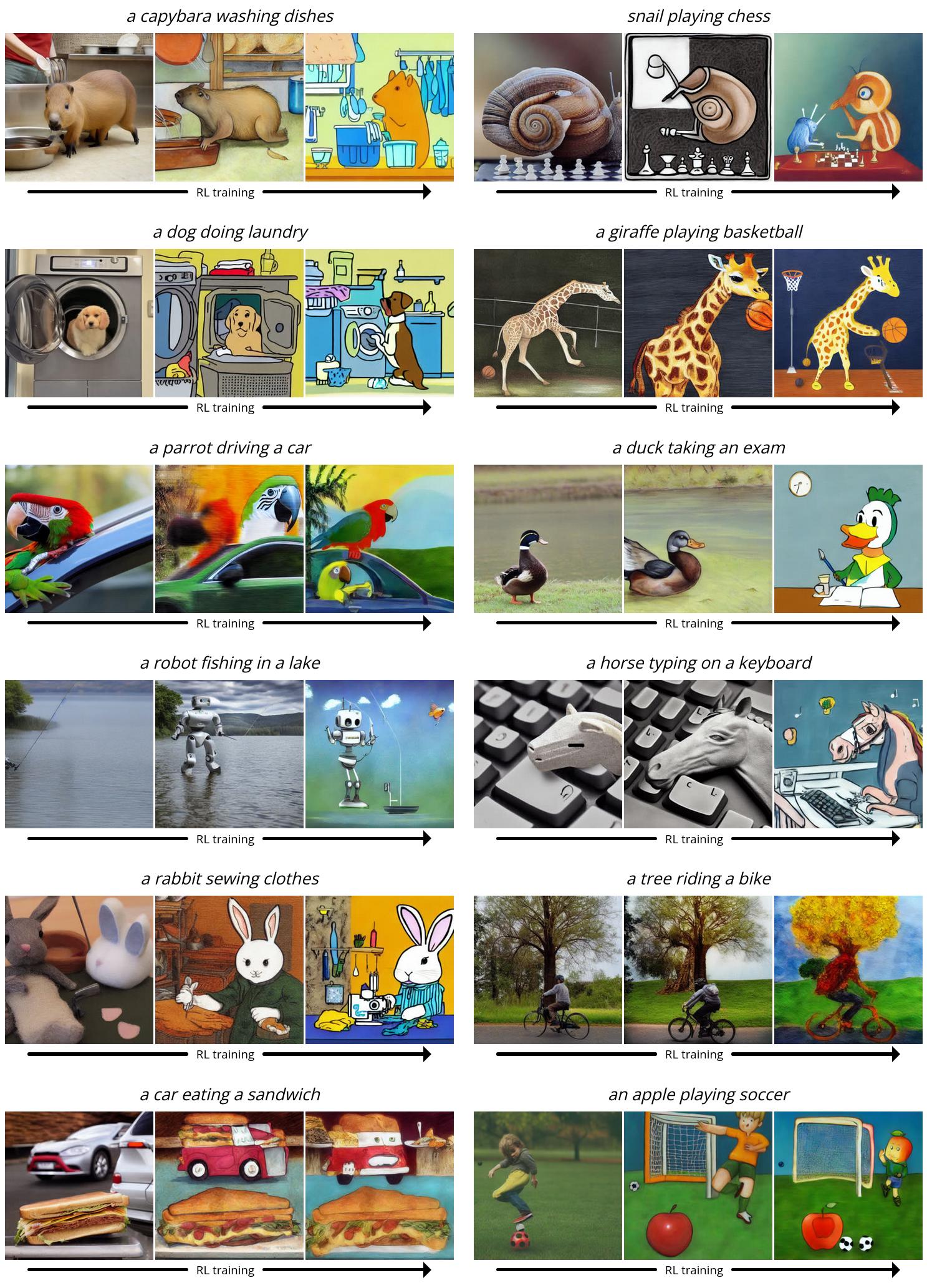

Since Stable Diffusion is a text-to-image model, we also need to pick a set of prompts to give it during fine-tuning. For the first three tasks, we use simple prompts of the form "a(n) [animal]". For prompt-image alignment, we use prompts of the form "a(n) [animal] [activity]", where the activities are "washing dishes", "playing chess", and "riding a bike". We found that Stable Diffusion often struggled to produce images that matched the prompt for these unusual scenarios, leaving plenty of room for improvement with RL fine-tuning.
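The prompt templates can be sketched as a small helper. The animal list below is a short hypothetical stand-in for the full training list, the activity strings are one phrasing of the three activities named above, and the vowel check for choosing "a" or "an" is a crude heuristic introduced here.

```python
import random

# Hypothetical subset; the actual training list of animals is longer.
ANIMALS = ["cat", "dog", "elephant", "owl"]
ACTIVITIES = ["washing dishes", "playing chess", "riding a bike"]

def with_article(noun: str) -> str:
    """Prefix a noun with 'a' or 'an' using a simple first-letter vowel check."""
    article = "an" if noun[0].lower() in "aeiou" else "a"
    return f"{article} {noun}"

def simple_prompt(animal: str) -> str:
    """Prompt of the form 'a(n) [animal]'."""
    return with_article(animal)

def alignment_prompt(animal: str, activity: str) -> str:
    """Prompt of the form 'a(n) [animal] [activity]'."""
    return f"{with_article(animal)} {activity}"

def sample_alignment_prompt(rng: random.Random) -> str:
    """Draw a random animal and activity and combine them into a prompt."""
    return alignment_prompt(rng.choice(ANIMALS), rng.choice(ACTIVITIES))

print(simple_prompt("owl"))
print(sample_alignment_prompt(random.Random(0)))
```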

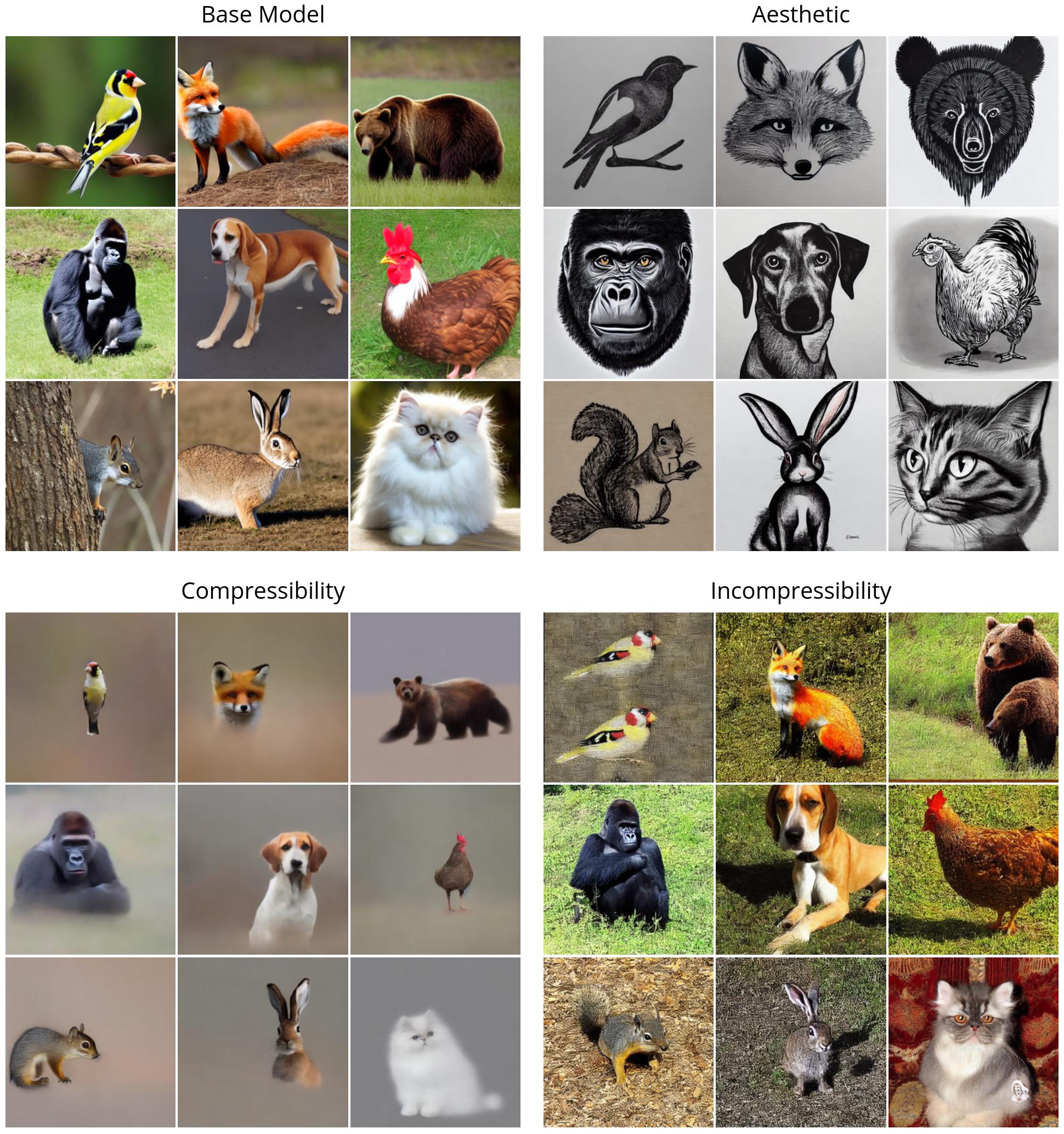

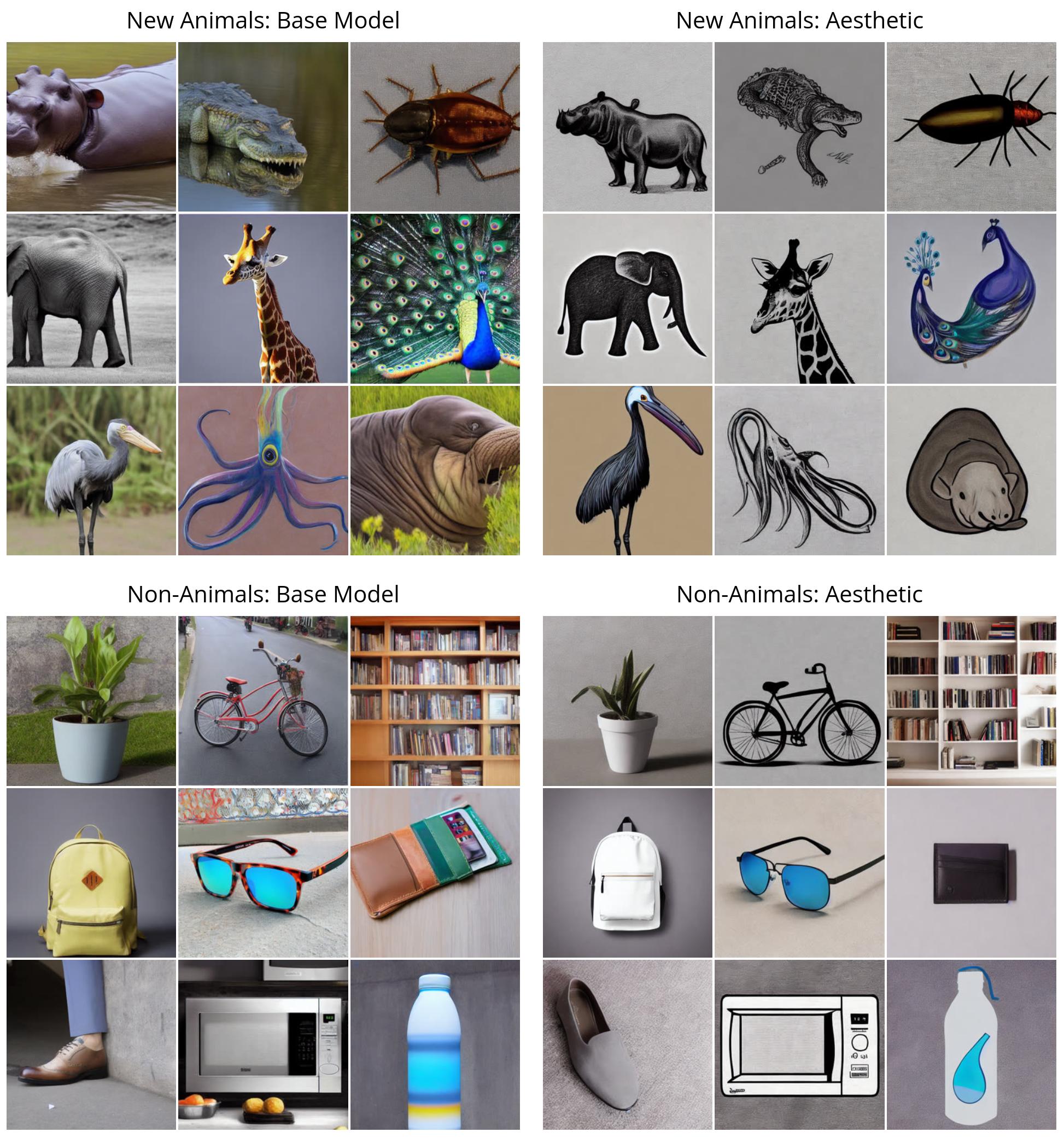

First, we illustrate the performance of DDPO on the simple rewards (compressibility, incompressibility, and aesthetic quality). All of the images are generated with the same random seed. In the top left quadrant, we show what "vanilla" Stable Diffusion generates for nine different animals; all of the RL-fine-tuned models show a clear qualitative difference. Interestingly, the aesthetic quality model (top right) tends towards minimalist black-and-white line drawings, revealing the kinds of images that the LAION aesthetic predictor considers "more aesthetic".

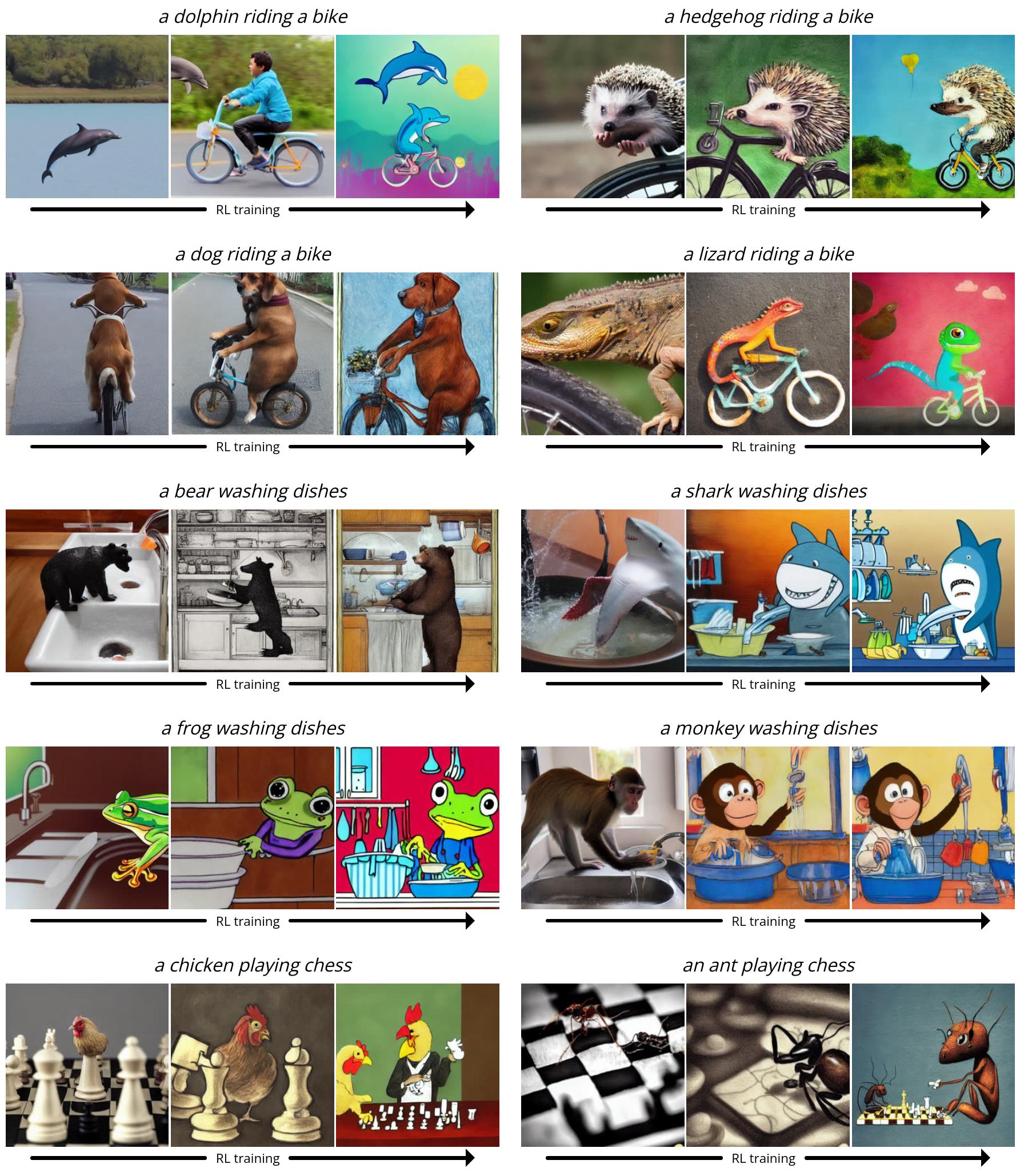

Next, we demonstrate DDPO on the more complex prompt-image alignment task. Here, we show several snapshots from the training process: each series of three images shows samples for the same prompt and random seed over time, with the first sample coming from vanilla Stable Diffusion. Interestingly, the model shifts towards a more cartoon-like style, which was not intentional. We hypothesize that this is because animals doing human-like activities are more likely to appear in a cartoon-like style in the pretraining data, so the model shifts towards this style to align more easily with the prompt by leveraging what it already knows.

Unexpected Generalization

Surprising generalization has been found to arise when fine-tuning large language models with RL: for example, models fine-tuned on instruction-following only in English often improve in other languages. We find that the same phenomenon occurs with text-to-image diffusion models. For example, our aesthetic quality model was fine-tuned using prompts selected from a list of 45 common animals. We find that it generalizes not only to unseen animals but also to everyday objects.

Our prompt-image alignment model used the same list of 45 common animals during training, and only three activities. We find that it generalizes not only to unseen animals but also to unseen activities, and even to novel combinations of the two.

Over-optimization

It is well known that fine-tuning on a reward function, especially a learned one, can lead to reward over-optimization, where the model exploits the reward function to achieve a high reward in a non-useful way. Our setting is no exception: in all of the tasks, the model eventually destroys any meaningful image content to maximize reward.

We also discovered that LLaVA is susceptible to typographic attacks: when optimizing for alignment with respect to prompts of the form "[n] animals", DDPO was able to fool LLaVA by instead generating text loosely resembling the correct number.

There is currently no general-purpose method for preventing over-optimization, and we highlight this problem as an important area for future work.

Conclusion

Diffusion models are hard to beat when it comes to producing complex, high-dimensional outputs. However, so far they have mostly been successful in applications where the goal is to learn patterns from large amounts of data, such as image-caption pairs. What we have found is a way to effectively train diffusion models that goes beyond pattern matching and does not necessarily require any training data. The possibilities are limited only by the quality and creativity of your reward function.

The way we used DDPO in this work is inspired by the recent successes of language model fine-tuning. OpenAI's GPT models, like Stable Diffusion, are first trained on huge amounts of Internet data; they are then fine-tuned with RL to produce useful tools like ChatGPT. Typically, their reward function is learned from human preferences, but others have more recently figured out how to produce powerful chatbots using reward functions based on AI feedback instead. Compared to the chatbot regime, our experiments are small-scale and limited in scope. But considering the enormous success of this "pretrain + fine-tune" paradigm in language modeling, it certainly seems worth pursuing further in the world of diffusion models. We hope that others can build on our work to improve large diffusion models, not just for text-to-image generation, but for many exciting applications such as video generation, music generation, image editing, protein synthesis, robotics, and more.

Furthermore, the "pretrain + fine-tune" paradigm is not the only way to use DDPO. As long as you have a good reward function, there is nothing stopping you from training with RL from the start. While this setting is as yet unexplored, it is a place where the strengths of DDPO could really shine. Pure RL has long been applied to a wide variety of domains, ranging from playing games to robotic manipulation to nuclear fusion to chip design. Adding the powerful expressivity of diffusion models to the mix has the potential to take existing applications of RL to the next level, or even to discover new ones.

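This post is based on the following paper: "Training Diffusion Models with Reinforcement Learning" by Kevin Black, Michael Janner, Yilun Du, Ilya Kostrikov, and Sergey Levine (arXiv:2305.13301).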

If you want to learn more about DDPO, you can check out the paper, website, and original code, or get the model weights on Hugging Face. If you want to use DDPO in your own project, check out my PyTorch + LoRA implementation, where you can fine-tune Stable Diffusion with less than 10GB of GPU memory!

If DDPO inspires your work, please cite it with:

@misc{black2023ddpo,

title={Training Diffusion Models with Reinforcement Learning},

author={Kevin Black and Michael Janner and Yilun Du and Ilya Kostrikov and Sergey Levine},

year={2023},

eprint={2305.13301},

archivePrefix={arXiv},

primaryClass={cs.LG}

}